Here I will show how I set up a simple system for wedging simulations in Houdini and outputting a mosaic mpeg of the results, with the parameter values displayed in the frame.

There were a few tricky gotchas but I will show you how I got past them.

What is wedging?

Imagine you are creating a Pyro explosion. There are a lot of parameters that can be varied to produce different end results. Creating a separate simulation and render for each value of a parameter, then compositing them side by side, is one way to compare the effects of changing that parameter, but it would be very laborious and time-consuming. Then imagine you wanted to vary multiple parameters. The task becomes extremely tedious.

Wedging is a quick way of testing the effect of a parameter on the outcome of a simulation.

This has been implemented in Houdini since the year dot, but now Houdini has TOPs, which makes the setup even more efficient.

Workflow:

- Decide what parameters you want to vary

- Create Wedge TOP nodes to create those variations

- If you are varying multiple parameters, then create multiple Wedge TOPs and chain them together

- Cache your simulation using the ROP_Geometry TOP

- Render each of those simulations using the ROP_Mantra TOP

- Composite text overlay using ROP_Composite_Output TOP

- Use Partition_by_Frame TOP to gather all your frames into groups which have the same frame number

- Use the ImageMagick TOP to create a montage of your frames

- Use the Wait_for_All TOP before encoding the output video

- Output an mpeg of the results using the FFMPEG_Encode_Video TOP

There are a couple of things you need before you can get started: ImageMagick and FFmpeg.

Install these as per their instructions.

OK, let's get started setting up this workflow.

{I am following Steve Knipping's Volumes II (version 2), so you may recognise some of this}

Here is my simple Pyro setup:

I am sourcing Density, Velocity and Temperature from a deformed sphere - like so:

The Pyro simulation is equally faithful to Mr Knipping's tutorial - like so:

At this point I decided to try the Wedging, so I diverted away from the Knipping and focussed on the TOP side.

I did need to import the Pyro simulation back into SOPs, so here is my setup for that: just a DOP_Import_Fields and a Null.

Now we dive into TOPs.

Firstly, at the top level, create a TOP network and dive inside.

Once inside TOPs, make a Wedge TOP node.

I chose to wedge a couple of parameters: Disturbance Reference Scale (shown above) and Disturbance Strength.

So I created two Wedge TOPs and chained them together.

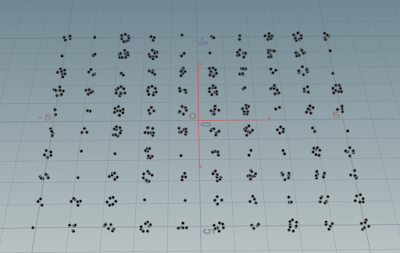

I have 4 values for Disturbance Reference Scale and 4 values for Disturbance Strength, making 16 variations in total.
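To see where the 16 comes from, here is a minimal Python sketch of what two chained wedges amount to: every value of the first parameter is paired with every value of the second. The parameter values are made up for illustration, and the running index is only an analogy for a wedge number, not necessarily the numbering PDG assigns.

```python
from itertools import product

# Hypothetical wedge values; substitute the ranges set on your own Wedge TOPs.
reference_scales = [0.5, 1.0, 1.5, 2.0]
strengths = [5.0, 10.0, 15.0, 20.0]


def wedge_combinations(first, second):
    """Pair every value of the first wedge with every value of the second."""
    return [
        {"index": i, "reference_scale": a, "strength": b}
        for i, (a, b) in enumerate(product(first, second))
    ]


variations = wedge_combinations(reference_scales, strengths)
print(len(variations))  # 16
```

Adding a third wedge with 4 values would multiply the count again to 64, which is why it pays to keep each wedge short.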

Now to cache these simulations out to disk.

Use the ROP_Geometry_Output node to cache the simulation.

Here is the first important gotcha:

In the ROP Fetch tab, make sure to enable 'All Frames in One Batch' because this is a simulation and each frame must be calculated in order.

This is also where you set the frame range of the simulation. When you render, by contrast, the renderer can take each frame separately (or in chunks).

The SOP path points to the output of the Pyro simulation, which is brought back into SOPs with the DOP_Import_Fields node.

The Output File uses an attribute called @wedgeindex. Because Output File is a string parameter, you have to wrap the attribute in back-ticks (i.e. `@wedgeindex`) to let Houdini evaluate its value.

@wedgeindex identifies which wedge you are currently simulating or rendering. It will be the same value for each rendered or simulated geometry file for that one wedge.

So, we will end up with a lot of geometry files, but they will be named using the @wedgeindex tag.
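As a rough illustration of why the wedge index has to be in the file name, here is a small Python sketch that builds cache paths from a wedge index and a frame number. The `geo/pyro_w...` naming pattern is my own hypothetical example, not what Houdini produces by default; the point is only that every wedge and frame pair gets its own file.

```python
def cache_path(wedge_index, frame, base="geo/pyro"):
    """Build a cache file name from a wedge index and a frame number.

    The naming pattern is a hypothetical example, not a Houdini default.
    """
    return f"{base}_w{wedge_index}.{frame:04d}.bgeo.sc"


# 16 wedges x 10 frames should give 160 distinct files.
paths = {cache_path(w, f) for w in range(16) for f in range(1, 11)}
print(cache_path(3, 12))  # geo/pyro_w3.0012.bgeo.sc
print(len(paths))         # 160
```

Leave the wedge index out of the pattern and all 16 wedges would write to the same 10 files, each overwriting the last.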

Now we have the geometry files, we need to render them.

Make a ROP_Mantra_Render TOP node and connect it to the previous node.

This time you can render frames out of order or in chunks - it doesn't matter. So in the ROP Fetch tab, you can set Frames per Batch to a larger number to reduce the load on the network.

Again, we need to use the `@wedgeindex` attribute in the output file name.

I would recommend checking the output frame size, because if you are making a mosaic of full-frame renders, the resulting mpeg could become enormous - 16k or larger. I chose to render at 1/3 size.
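A quick back-of-the-envelope check of the mosaic size can be done in a few lines of Python. This sketch assumes 1920x1080 renders laid out in a 4x4 grid, which is my assumption for the example; plug in your own render size and grid.

```python
def montage_size(tile_width, tile_height, columns, rows, divisor=1):
    """Return the (width, height) of a grid of equally sized tiles."""
    width = (tile_width // divisor) * columns
    height = (tile_height // divisor) * rows
    return width, height


# Assumed 1920x1080 renders in a 4x4 grid; swap in your own numbers.
full_size = montage_size(1920, 1080, 4, 4)
third_size = montage_size(1920, 1080, 4, 4, divisor=3)
print(full_size)   # (7680, 4320)
print(third_size)  # (2560, 1440)
```

With 4K source renders the full-size width doubles again, so scaling the tiles down before building the mosaic keeps the final video manageable.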

OK, now we have a lot of rendered frames. It's a good idea to label them with which wedged attributes they are using. There is little point having a lovely mosaic of pyro sims if you don't know which version of the wedged attributes your favourite one refers to. I discovered on the SideFX forum that there is a very clever solution to this problem, which involves a COP network.

Don't worry, I will give you the details here.

Create an Overlay_Text TOP node.

We want to put a Python script in the Overlay tab, but to do this you have to dive into the node.

Once inside the COP network, select the Font node. This is where the Python script goes.

However, just copying the code into the text box will not work. First, convert the text box into a Python script box: right-click in the text box and choose Expression > Change Language to Python.

Now copy the Python code into the Python text box:

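    import pdg

    # Work item currently being evaluated by this parameter expression
    active_item = pdg.EvaluationContext.workItemDep()
    data = active_item.data
    attribs = ""

    # "wedgeattribs" lists the names of the attributes created by the Wedge TOPs
    for wedge_attrib in data.stringDataArray("wedgeattribs"):
        # Try each attribute type in turn until one returns a value
        val = data.stringDataArray(wedge_attrib)
        if not val:
            val = data.intDataArray(wedge_attrib)
        if not val:
            val = data.floatDataArray(wedge_attrib)
        if not val:
            continue
        # Strip the list brackets and round to 3 decimal places
        val = round(float(str(val)[1:-1]), 3)
        attribs += "{} = {}\n".format(wedge_attrib, str(val))

    return attribs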

The text box will change to a purple colour.
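If you want to check the label formatting outside Houdini, the sketch below mirrors the same logic with a plain dict standing in for the work item data. The dict and the attribute names in it are stand-ins I made up for illustration; the pdg calls themselves only make sense inside a PDG cook.

```python
def format_wedge_label(wedge_values):
    """Format {attribute name: [value]} pairs as 'name = value' lines."""
    label = ""
    for name, values in wedge_values.items():
        if not values:
            continue
        # str([0.25]) is "[0.25]", so [1:-1] strips the surrounding brackets.
        val = round(float(str(values)[1:-1]), 3)
        label += "{} = {}\n".format(name, val)
    return label


# Hypothetical attribute names and values, one value per attribute.
example = {"dist_scale": [0.123456], "dist_strength": [4], "unused": []}
print(format_wedge_label(example))
```

Note that the bracket-stripping trick only works for single numeric values; an attribute holding several values or a string would make `float()` raise an error.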

Next, we need to sort the rendered frames by frame number - get all the frame 1's together, frame 2's together, etc. This is done by the Partition_by_Frame TOP node. Drop one down.
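Conceptually, partitioning by frame is just grouping files by the frame number in their names. Here is a minimal Python sketch of that idea, assuming file names of the form `name.0001.exr`; both the naming pattern and the example paths are hypothetical, and the TOP node works on work items rather than on file names like this.

```python
import re
from collections import defaultdict

# Matches the frame number in names like "pyro_w0.0001.exr" (assumed pattern).
FRAME_RE = re.compile(r"\.(\d+)\.[^.]+$")


def partition_by_frame(paths):
    """Group file paths by the frame number embedded in their names."""
    groups = defaultdict(list)
    for path in paths:
        match = FRAME_RE.search(path)
        if match:
            groups[int(match.group(1))].append(path)
    return dict(groups)


# Hypothetical renders: 2 wedges x 2 frames.
renders = [
    "render/pyro_w0.0001.exr",
    "render/pyro_w0.0002.exr",
    "render/pyro_w1.0001.exr",
    "render/pyro_w1.0002.exr",
]
print(partition_by_frame(renders)[1])
# ['render/pyro_w0.0001.exr', 'render/pyro_w1.0001.exr']
```

Each group then holds one image per wedge for a single frame, which is exactly what a montage needs as input.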

Follow it with an ImageMagick TOP node. Set the node to 'Montage'.
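For reference, this is roughly the kind of command-line call a montage boils down to. The sketch below only builds the argument list for ImageMagick's `montage` tool and does not run it; the file names are hypothetical, and I am not claiming these are the exact arguments the TOP node passes.

```python
def montage_command(inputs, output, columns=4, rows=4):
    """Build an ImageMagick montage call that tiles inputs with no padding."""
    return [
        "montage",
        *inputs,
        "-tile", f"{columns}x{rows}",   # grid layout
        "-geometry", "+0+0",            # no gap between tiles
        output,
    ]


# Hypothetical inputs: one render per wedge for frame 1.
frame_inputs = [f"render/pyro_w{w}.0001.png" for w in range(16)]
command = montage_command(frame_inputs, "montage/montage.0001.png")
print(" ".join(command))
```

On ImageMagick 7 the same call is usually written as `magick montage ...`. Passing the list to `subprocess.run` would execute it, assuming the tool is on your PATH.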

Gotcha! Have a look at the Output Filename. It references a variable called $PDG_DIR, but this variable does not exist in Houdini by default, so you need to create it.

So in Houdini's main menu, go to Edit > Aliases and Variables and add the following entries:

We are nearly there.

Before we can encode the mpeg, we must wait for all the montage files to be written by ImageMagick, so drop down a Wait_For_All TOP node.

Finally, we can encode the images into a video file. Use the FFMPEG_Encode_Video TOP node.
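If you ever need to do the encode by hand, the equivalent FFmpeg call looks something like the sketch below. It only builds the argument list and does not run anything; the input pattern, output name and frame rate are my assumptions, and the node may well use different options.

```python
def ffmpeg_command(input_pattern, output, fps=24):
    """Build an ffmpeg call that encodes a numbered image sequence to H.264."""
    return [
        "ffmpeg",
        "-y",                       # overwrite the output file if it exists
        "-framerate", str(fps),     # frame rate of the input image sequence
        "-i", input_pattern,        # printf-style pattern, e.g. name.%04d.png
        "-c:v", "libx264",          # H.264 video codec
        "-pix_fmt", "yuv420p",      # pixel format most players can read
        output,
    ]


# Hypothetical montage sequence and output file.
encode = ffmpeg_command("montage/montage.%04d.png", "wedges.mp4")
print(" ".join(encode))
```

One thing to watch with `libx264` and `yuv420p` is that the frame width and height need to be even numbers, which is another reason to check the montage size before rendering.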

Again, watch out for those $PDG_TEMP and $PDG_DIR variables.

You might also need to set the FFMPEG path to the folder that contains the FFMPEG executable file. I didn't need to do this, but some people might have to if they already had FFMPEG installed before Houdini was installed.
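A quick way to find out whether you need to set the path is to check if the executables can be found on your PATH. This small sketch uses Python's standard `shutil.which`; the tool names are the usual command names, but they may differ on your system (for example `magick` instead of `montage` on newer ImageMagick installs).

```python
import shutil


def find_tools(names=("ffmpeg", "montage")):
    """Return {tool name: full path, or None if it is not on the PATH}."""
    return {name: shutil.which(name) for name in names}


for tool, location in find_tools().items():
    print(tool, "->", location or "not found on PATH")
```

If a tool comes back as not found, point the node at the folder containing the executable, or add that folder to your PATH.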

That's all there is to it!

I will attach a setup file, because something is bound to go wrong.